The GenerIA Blog

Rethinking Tokenization: How SuperBPE Breaks the Space Barrier

It just took questioning an arbitrary assumption (the Einstein way) to bring tokenization closer to reality and overcome a years-long limitation in one of the fundamental layers of the NLP stack.

By the principle of "garbage in, garbage out", tokenization is one of the most critical stages in building language models, yet it is typically taken for granted. For nearly a decade, subword tokenizers like Byte-Pair Encoding (BPE) have followed a simple but rigid rule: never cross word boundaries. Spaces were treated as the universal semantic separator. But a team from the University of Washington, NVIDIA, and the Allen Institute for AI has challenged that assumption [1], and the results are remarkable.

Their method, SuperBPE, introduces a simple modification: allow tokens to span whitespace. According to the authors, this change cuts token counts by up to 33%, reduces inference compute by 27%, and boosts downstream accuracy by 4% on average, all by relaxing a constraint that has been in place ever since natural language processing took a giant leap forward with the famous Attention Is All You Need paper [2] by Google scientists and the transformer models that followed.

Word Boundaries Don't Always Make Sense

Traditional tokenizers treat whitespaces as semantic walls, assuming that each word is a standalone unit. But that's not how we humans interpret language. We understand expressions like "by the way" or "New York City" as single semantic chunks.
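To make the "wall" concrete, here is a minimal sketch of whitespace pre-tokenization, the step that runs before BPE merges are learned or applied. The regular expression is a simplified stand-in chosen for this illustration (production tokenizers use more elaborate patterns), and the function name `pretokenize` is hypothetical.

```python
import re

# Simplified assumption: a chunk is an optional leading space followed by
# a run of non-space characters. Real pre-tokenizers are more elaborate.
CHUNK = re.compile(r" ?\S+")

def pretokenize(text: str) -> list[str]:
    """Split text into chunks that BPE merges are never allowed to cross."""
    return CHUNK.findall(text)

print(pretokenize("by the way"))
# ['by', ' the', ' way']
```

Because merges only ever happen inside one chunk, no token learned this way can cover "by the way" as a whole, however often the phrase occurs.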

This die-hard reflex is symptomatic of the English bias in everyday natural language processing. In other languages, most notably agglutinative ones like Turkish or those that favor compounding like German, it is more obvious that whitespace is only a loose guide to meaning. A compound like Weltanschauung (German for "worldview") packs a multi-word concept into a single word with no spaces at all. Language models trained on such inputs already cope with this flexibility. So why should tokenization for languages like English or French assume spaces are sacrosanct?

How SuperBPE Works

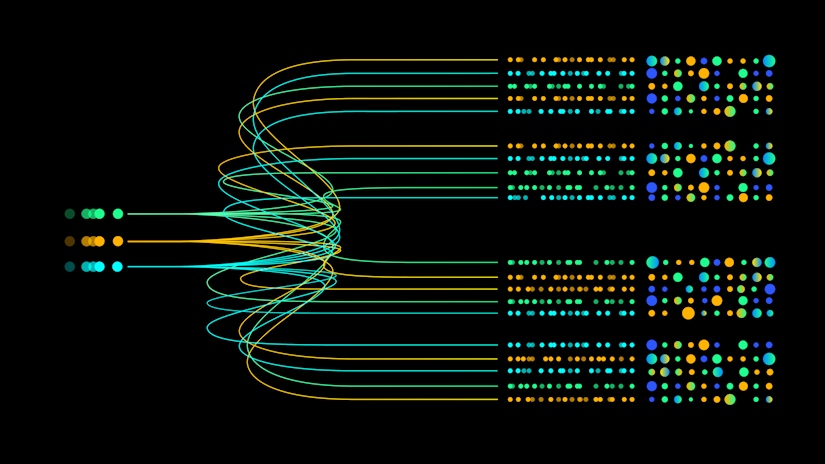

SuperBPE doesn't throw out BPE; it extends it. Tokenizer training proceeds in two stages:

- Initial phase: standard BPE runs on text (respecting whitespaces) up to a vocabulary size t.

- "Super" phase: beyond that, training continues without respecting whitespaces, allowing tokens to span across words.

This transition allows the tokenizer to start with word-level units and then move on to capturing frequent multi-word expressions. As the vocabulary grows, its tokens become more semantically coherent: an expression like "The Big Apple" becomes a single unit, not an arbitrary sequence of discrete, semantically disconnected words.
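The two stages can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the authors' implementation: it merges characters rather than bytes, expresses the transition point as a number of merges rather than as the vocabulary size t, uses a simplified whitespace regex for pre-tokenization, and the function names (`merge_pair`, `learn_merges`, `train_two_stage`) are made up for this example.

```python
import re
from collections import Counter

def merge_pair(seq, pair):
    """Replace each adjacent occurrence of `pair` in `seq` by one merged token."""
    out, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            out.append(seq[i] + seq[i + 1])
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return out

def learn_merges(seqs, num_merges):
    """Greedy BPE: repeatedly merge the most frequent adjacent pair.
    Pairs are only counted inside a sequence, never across two sequences."""
    merges = []
    for _ in range(num_merges):
        counts = Counter()
        for seq in seqs:
            counts.update(zip(seq, seq[1:]))
        if not counts:
            break  # nothing left to merge
        best = max(counts, key=counts.get)
        merges.append(best)
        seqs = [merge_pair(seq, best) for seq in seqs]
    return merges, seqs

def train_two_stage(text, stage1_merges, stage2_merges):
    # Stage 1: one sequence per whitespace-delimited chunk, so merges
    # cannot cross word boundaries (standard BPE behaviour).
    chunks = [list(c) for c in re.findall(r" ?\S+", text)]
    merges1, chunks = learn_merges(chunks, stage1_merges)
    # Stage 2: flatten everything into a single sequence, so further
    # merges are free to span spaces.
    stream = [tok for chunk in chunks for tok in chunk]
    merges2, (stream,) = learn_merges([stream], stage2_merges)
    return merges1 + merges2, stream

text = "by the way by the way by the way"
_, stream = train_two_stage(text, stage1_merges=20, stage2_merges=1)
print(stream)
# ['by', ' the way', ' by', ' the way', ' by', ' the way']
```

With `stage2_merges=0` the same corpus stops at whole words (`' the'`, `' way'`); a single extra merge in the second stage is enough for a token to span a space.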

Why This Matters: Efficiency and Accuracy

With SuperBPE, fewer tokens are needed to represent the same text. That's not just elegant from an engineering standpoint: it has a real computational, and therefore environmental, impact. For example, according to the authors, SuperBPE achieves up to 49% higher encoding efficiency (bytes per token) compared to standard BPE at scale.

The research team trained 8B-parameter models from scratch, changing nothing but the tokenizer. SuperBPE models outperformed their BPE counterparts, winning on 25 out of 30 evaluated tasks, while using less inference compute. On benchmarks like CommonsenseQA [3] and ARC-Easy [4], performance gains exceeded 20%.
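The 49% figure and the "up to 33% fewer tokens" figure quoted earlier look like two views of the same measurement: if the same bytes are spread over fewer tokens, each token carries proportionally more bytes. The short check below assumes exactly that relationship; the helper name `bytes_per_token_gain` is hypothetical.

```python
def bytes_per_token_gain(token_reduction: float) -> float:
    """Relative gain in bytes per token when the same text needs
    `token_reduction` (a fraction, e.g. 0.33) fewer tokens."""
    return 1.0 / (1.0 - token_reduction) - 1.0

print(f"{bytes_per_token_gain(0.33):.0%}")
# 49%
```

The same ratio applies to context: under this assumption, a fixed token budget holds roughly 1.49 times as many bytes of text.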

Under the Hood

SuperBPE balances the token space. Where traditional BPE over-represents trivial tokens ("the", "of"...), SuperBPE evens out the distribution by learning multi-word phrases as atomic units. This mirrors how humans process language: not word by word but semantic unit by semantic unit.

As a result, models allocate capacity more effectively, avoiding wasted effort on easy predictions while improving generalization on harder tasks and doing noticeably better on inputs rich in figurative language.

Practical Impacts and the Road Ahead

For once in the deluge of recent NLP- and AI-related papers, SuperBPE isn't just an academic curiosity. It delivers smaller token counts, which translates into faster inference and lower memory use. It delivers higher efficiency per parameter, meaning better models without scaling up. And, as a side effect, it extends the effective context: longer documents fit within the same token limit.

The big win here may appear mainly conceptual, in that we should stop blindly respecting the spacebar. But make no mistake: in our continuing pursuit of more efficient and capable language models, smarter tokenization strategies like SuperBPE become foundational. Which brings us to the next questions:

How does cross-token modeling interact with multi-token prediction strategies?

Can similar techniques benefit multimodal models operating over both text and vision?

References

[1] A. Liu, J. Hayase, V. Hofmann, S. Oh, N. A. Smith, Y. Choi, SuperBPE: Space Travel for Language Models

[2] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, I. Polosukhin, Attention Is All You Need

[3] A. Talmor, J. Herzig, N. Lourie, J. Berant, CommonsenseQA: A Question Answering Challenge Targeting Commonsense Knowledge

[4] P. Clark, I. Cowhey, O. Etzioni, T. Khot, A. Sabharwal, C. Schoenick, O. Tafjord, Think you have Solved Question Answering? Try ARC, the AI2 Reasoning Challenge
